
SPI x4 - Quadruple SPI throughput

Sending faster and more using less

The SPI interconnection model is ubiquitous and almost any micro-controller out there supports it in hardware. SPI data transfers can be fast, very fast. In fact, they can be too fast for a micro-controller to handle. But how fast is too fast, you ask? Well, rather slow, it turns out.

The problem at hand was a SPI daisy-chain of 53 devices, each requiring 8 data-bytes. Each device sends the data it receives out again with an 8-character delay(*). The total amount of data is 424 bytes (3392 bits) per update. The update rate must not drop below 100 frames per second, which requires a minimum data rate of 42.4 kByte/s (339.2 kBit/s).

There are several modes of operation when using SPI, as seen from the micro-controller's perspective:

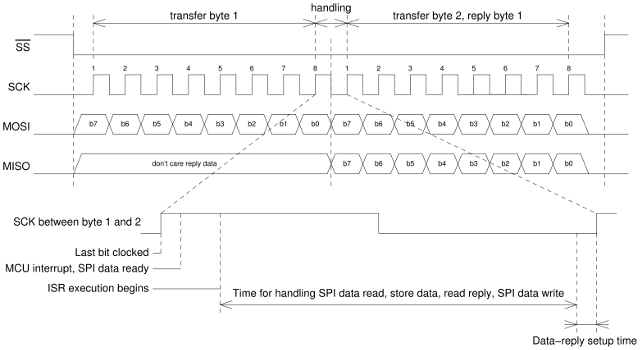

The second and third cases are much more complicated to handle, especially when a zero-latency reply is required. For the second case, if we assume that the first byte received is a command-byte, then the reply is a calculated response to that command. It also means that DMA will not help very much, because the reply data depends on which command was issued. The third case differs from the second only in that it sends a different reply. The timing of the micro-controller does not change significantly: it still has to receive the data, store it, prepare a response and send the response. The timing of these cases is shown below:

Figure 1: Standard SPI timing
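The daisy-chain from the introduction can be seen as one instance of this receive, store and reply pattern: each slave replies with the byte it received eight characters earlier. The Python sketch below is a hypothetical model of that chain. The FIFO representation and the function name are illustrative only. It assumes that each slave takes the 8 bytes left in its buffer at the end of a frame as its own payload.

```python
from collections import deque

# Hypothetical model of the 53-device daisy-chain. Each slave holds an 8-byte
# FIFO; while it shifts in a new byte, it shifts out the byte it received
# 8 characters earlier. Assumption: the 8 bytes left in a slave's FIFO when
# the frame ends are that slave's payload.

DEVICES = 53
DEPTH = 8


def shift_through_chain(frame, devices=DEVICES, depth=DEPTH):
    """Return the contents of each slave's FIFO after the whole frame is clocked out.

    Index 0 is the slave whose MOSI is wired to the master; the last index is
    the far end of the chain.
    """
    fifos = [deque([0] * depth) for _ in range(devices)]
    for byte in frame:
        incoming = byte
        for fifo in fifos:
            fifo.append(incoming)      # byte arriving on this slave's MOSI
            # Byte received `depth` characters ago leaves on this slave's MISO,
            # which is the next slave's MOSI.
            incoming = fifo.popleft()
    return [list(fifo) for fifo in fifos]


if __name__ == "__main__":
    frame = [i & 0xFF for i in range(DEVICES * DEPTH)]  # 424 bytes of test data
    payloads = shift_through_chain(frame)
    print("slave nearest the master:", payloads[0])
    print("slave at the far end:    ", payloads[-1])
```

One consequence is visible immediately. The first 8 bytes the master sends end up in the far end of the chain, and the last 8 bytes stay in the slave closest to the master.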

It is assumed that the SPI master is a capable device. The master operates using non-stop transfers, and bytes are sent and received at the master without intermediate delays. One such example is the SPI interface of the Raspberry Pi, which will send heaps and heaps of data as fast as the SPI clock allows.

The bottleneck of the transfer is the limited time available to read the data, prepare a response and send it. All of this has to be done within one SPI clock-cycle, because the inter-character delay is limited to one SPI clock-cycle. This sets a limit on the maximum speed that can be achieved: the micro-controller must do all of its work within that one tiny SPI clock-cycle. Effectively, the SPI clock is limited by the micro-controller's software timing for handling the SPI request. Increasing the SPI clock is not possible, even though the micro-controller's SPI-interface can happily operate at higher frequencies. The micro-controller simply cannot handle the data in the short time given.

An obvious solution would be to increase the inter-character delay by stretching the SPI clock between transfers. But finding a SPI master controller with such an option is a very difficult, if not impossible, job. Most SPI controllers are made to be fast and simple, and the simple attribute is especially important. It takes very little hardware to implement the SPI shift-register, while adding top-level control for the inter-character delay is expensive.

The project at hand (53 daisy-chained devices) uses devices with a cheap PIC micro-controller. The firmware was initially written in C, and it turned out that handling the data took somewhere between 7 µs and 8 µs. The SPI clock could be set to a maximum of 125 kHz without causing drop-outs. A serious round of optimization in assembly reduced the firmware's timing to under 4 µs, allowing a SPI clock of just over 250 kHz. Even the optimized firmware cannot meet the timing requirements: the SPI clock needs to be at least 340 kHz to reach the required frame-rate, and the firmware leaves little room for further improvement. The best case scenario would be:

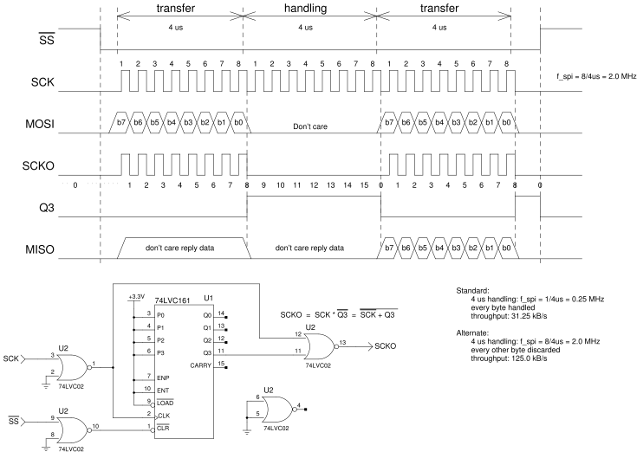
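The numbers above can be cross-checked with a few lines of arithmetic. This is a minimal Python sketch. It assumes that the slave must finish its per-byte work within one SPI clock period, so that the handler time sets the minimum clock period, and that the master streams without gaps. The function names are illustrative only.

```python
# Back-of-the-envelope timing for the direct (non-interleaved) SPI link.
# Assumption: the slave must finish its per-byte work within one SPI clock
# period, so the handler time sets the minimum clock period.

DEVICES = 53            # daisy-chained slaves
BYTES_PER_DEVICE = 8    # payload bytes per slave
MIN_FRAME_RATE = 100.0  # required frames per second

BITS_PER_FRAME = DEVICES * BYTES_PER_DEVICE * 8  # 424 bytes = 3392 bits


def required_bit_rate(frame_rate=MIN_FRAME_RATE):
    """Bit rate (bit/s) needed to send a full frame `frame_rate` times per second."""
    return BITS_PER_FRAME * frame_rate


def max_direct_clock(handler_time_s):
    """Highest SPI clock (Hz) when the handler must fit in one clock period."""
    return 1.0 / handler_time_s


def frames_per_second(spi_clock_hz, bits_per_frame=BITS_PER_FRAME):
    """Frame rate of a continuous, gap-free SPI stream."""
    return spi_clock_hz / bits_per_frame


if __name__ == "__main__":
    print(f"required: {required_bit_rate() / 1e3:.1f} kbit/s")
    for label, t in (("C firmware", 8e-6), ("assembly", 4e-6)):
        f = max_direct_clock(t)
        print(f"{label}: {f / 1e3:.0f} kHz -> {frames_per_second(f):.1f} frames/s")
```

With an 8 µs handler the ceiling is 125 kHz. Even the 4 µs assembly version only reaches 250 kHz, which is about 74 frames/s and still short of the required 100.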

The solution to the problem is to increase the inter-character delay: make the SPI wait between characters so that the micro-controller can process the data before the SPI resumes. But the SPI master does not support an inserted delay at the hardware level. Doing so in software is a sketchy proposition at best, and at worst needlessly uses CPU resources for hardware timing.

Figure 2: Interleaved SPI timing

Enter the hardware arena. Let the software send twice the amount of data at eight times the speed and get an improved throughput by a factor of four.

Figure 2 above adds an inter-character delay by disabling the SPI clock for exactly one transfer. The SPI slave's clock input is connected to the SCKO signal. The SPI master sends two bytes and the slave sees only one. The effect is that the slave micro-controller has one whole character time to process the SPI data, prepare a response and send the reply. The inter-character delay increases from less than one SPI clock-cycle to slightly under 8 SPI clock-cycles. Therefore, the SPI clock frequency may be increased by a factor of eight.

The SPI master's clock frequency is set such that one character transfer (8 bits) equals the amount of time needed by the slave to process the SPI data and handle the reply. For the example at hand, the (semi-) optimized timing lies around 4 µs, which means a SPI clock period of 500 ns, or a 2 MHz SPI clock frequency. The amount of data sent by the master does double, because it must generate an extra eight clock-pulses per byte. The net effect is still a four-fold increase in throughput: eight times the clock for twice the data. The master has to send dummy data every other byte and will also receive dummy data every other byte. But this is a small price to pay for a four-fold increase in throughput.

The added hardware consists of a 4-bit synchronous counter with asynchronous reset, two inverters and a NOR-gate. The inverters are emulated using NOR-gates so that only two chips are required for the whole setup. The SPI clock to the slave is blocked whenever the counter is at count 8...15 (the counter's Q3 is high). The slave select (/SS) signal serves as a safety net: it resets the counter to zero whenever the slave is deselected. The NOR-gates introduce a small propagation delay to the clock (around 10..14 ns), but that is insignificant to the system. The 500 ns SPI clock period is more than an order of magnitude longer, and the data is stable at the correct edge.
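The gate logic can be checked with a small behavioural model. This Python sketch is an illustration, not the original schematic. It assumes SPI mode 0, a counter that advances on the falling edge of SCK, and /SS resetting the counter before each burst. SCKO is built from NOR gates only. The sketch also computes the frame rate once every payload byte is paired with a dummy byte.

```python
# Behavioural sketch of the SCK gate. Assumptions (not from the original
# schematic): SPI mode 0 (SCK idles low, slave samples on the rising edge),
# the counter advances on the falling edge of SCK (clocked through a NOR used
# as an inverter), and /SS high holds the counter at zero, modelled here by
# starting every burst with count = 0.


def nor(a, b):
    """Two-input NOR gate on 0/1 values."""
    return 0 if (a or b) else 1


def scko(sck, q3):
    """Gated slave clock: NOR(NOT SCK, Q3), i.e. SCK AND NOT Q3."""
    return nor(nor(sck, sck), q3)  # nor(x, x) acts as an inverter


def slave_view(master_bytes):
    """Bytes a mode-0 slave clocked from SCKO shifts in during one /SS-low burst."""
    count = 0                      # 4-bit counter, reset while /SS was high
    shift, nbits = 0, 0
    received = []
    for byte in master_bytes:
        for i in range(7, -1, -1):             # SPI shifts MSB first
            mosi = (byte >> i) & 1
            q3 = (count >> 3) & 1
            # Rising edge of SCK: SCKO was low while SCK was low, so a high
            # SCKO here is a rising edge and the slave samples MOSI.
            if scko(1, q3):
                shift = ((shift << 1) | mosi) & 0xFF
                nbits += 1
                if nbits == 8:
                    received.append(shift)
                    shift, nbits = 0, 0
            # Falling edge of SCK: the counter advances. Q3 only changes while
            # SCK is low, so the gate output cannot produce a runt pulse.
            count = (count + 1) & 0xF
    return received


def interleaved_frame_rate(spi_clock_hz, payload_bits=53 * 8 * 8):
    """Frames/s when every payload byte is followed by one dummy byte."""
    return spi_clock_hz / (2 * payload_bits)


if __name__ == "__main__":
    print([hex(b) for b in slave_view([0xA5, 0x00, 0x3C, 0xFF])])
    print(f"{interleaved_frame_rate(2e6):.1f} frames/s at 2 MHz")
```

Running it prints `['0xa5', '0x3c']` and `294.8 frames/s at 2 MHz`. The slave only sees every other byte, and the doubled stream at 2 MHz still delivers four times the frame rate of the 250 kHz direct link. Clocking the counter on the falling edge is the assumption that keeps SCKO glitch-free, because Q3 changes only while SCK is low.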
The added hardware is geared for use in SPI mode 0. Other modes can be accommodated with some changes; this is left to the reader if necessary.

For the daisy-chain example of 53 devices, this solves the frame-rate problem adequately. At the maximum SPI frequency of 2 MHz, the frame-rate is now ~294.8 frames/s, well above the required minimum of 100. In fact, the micro-controllers on the slaves now have plenty of time to process the data, and the main code is interrupted for a shorter period of time. The SPI frequency may even be lowered to reduce energy consumption and to ease possible transmission line problems (even though all devices contain drivers and impedance matching on the SPI lines).

Note: You may be tempted to daisy-chain the SPI devices with no character delay. That would be faster, actually a lot faster, because the micro-controller would not need to create a reply. The reply would implicitly equal the data just received. However, knowing which data each device should extract from the stream is a totally different matter. Each device suddenly needs to know where in the chain it is located, or at least have a unique address to filter the stream. That requires each device to be set up differently, which is a lot of work in and of itself. Better to have all devices exactly the same.

Posted: 2015-07-07

Overengineering @ request | Prutsen & Pielen since 1982